SimplAI April Release: New Tools to Accelerate Multi-Agent AI Development

We’re excited to roll out the latest updates to the SimplAI Platform, designed to improve your multi-agent, no-code/low-code AI workflow development experience. This release brings tools to structure LLM responses, access external data, and accelerate ingestion at scale.

🌟 New Features

🧩 LLM Response Format – JSON Schema Builder

Structure your LLM responses with precision using our JSON Schema Builder. This tool allows you to define custom schemas that instruct the LLM to respond in a consistent, structured format—ideal for downstream workflows and integrations.
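To illustrate what a structured response format buys you downstream, here is a minimal Python sketch. It is not SimplAI's implementation or API. The schema `TICKET_SCHEMA` and the helper `conforms` are hypothetical names invented for this example, and the check covers only a flat subset of JSON Schema (`required` plus basic `type` checks).

```python
import json

# Hypothetical schema of the kind one might define in a schema builder.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string"},
        "priority": {"type": "integer"},
        "summary": {"type": "string"},
    },
    "required": ["category", "priority", "summary"],
}

# Maps JSON Schema type names to Python types (only the subset used here).
_PY_TYPES = {"string": str, "integer": int}


def _matches(value, type_name):
    """Check one value against a JSON Schema type name."""
    if type_name == "integer":
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, int) and not isinstance(value, bool)
    return isinstance(value, _PY_TYPES[type_name])


def conforms(raw_response, schema):
    """Return True if raw_response is a JSON object matching the flat schema."""
    try:
        data = json.loads(raw_response)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    # Every required key must be present.
    if any(key not in data for key in schema.get("required", [])):
        return False
    # Every declared property that is present must have the declared type.
    for key, spec in schema.get("properties", {}).items():
        if key in data and not _matches(data[key], spec["type"]):
            return False
    return True
```

A downstream step could call `conforms(response_text, TICKET_SCHEMA)` before passing the parsed fields on, and retry or flag the response when the check fails.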

📞 Outbound Calling Tool (Pre-built Tool Step)

SimplAI now includes a built-in tool step for outbound voice calling. Use it to create AI Calling Agents capable of initiating and managing phone conversations, driven entirely by intelligent workflows.

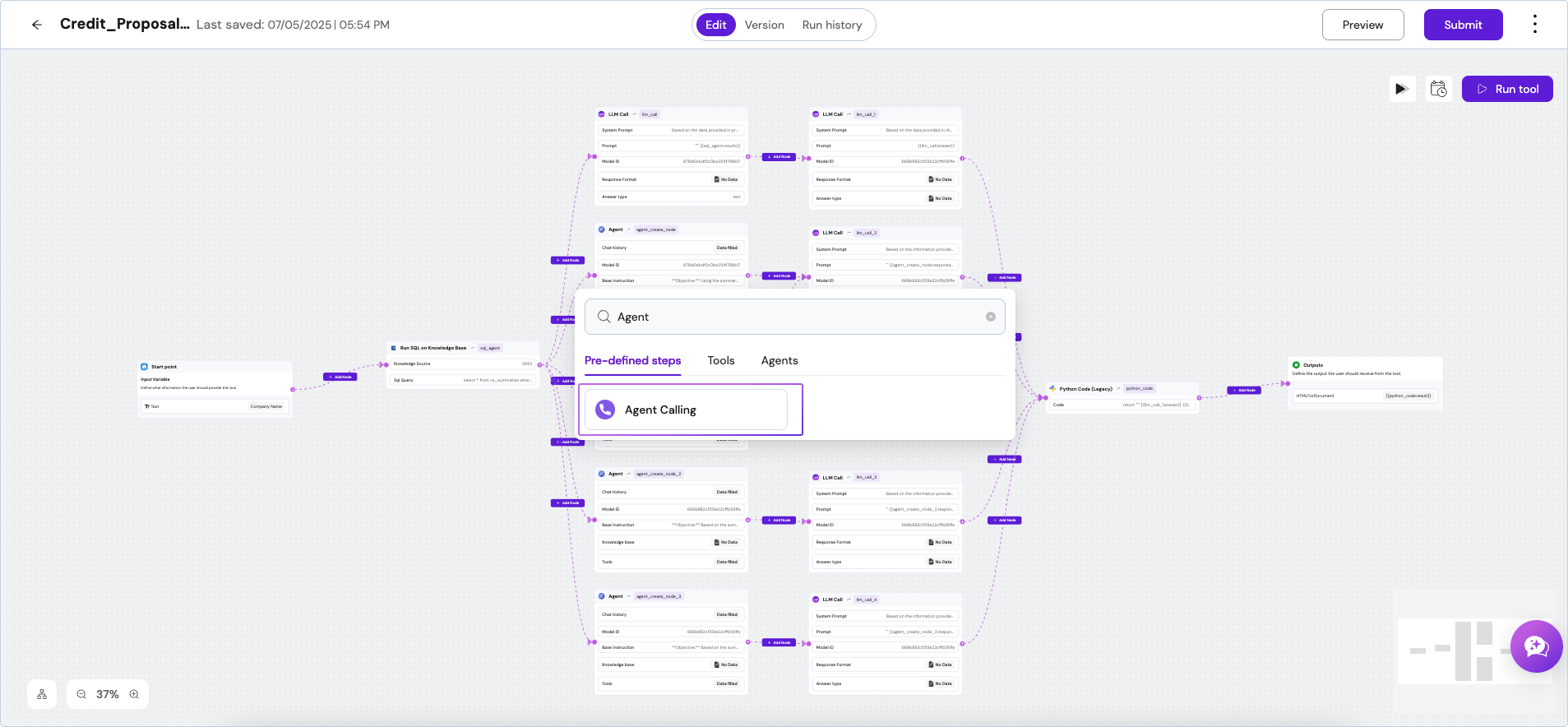

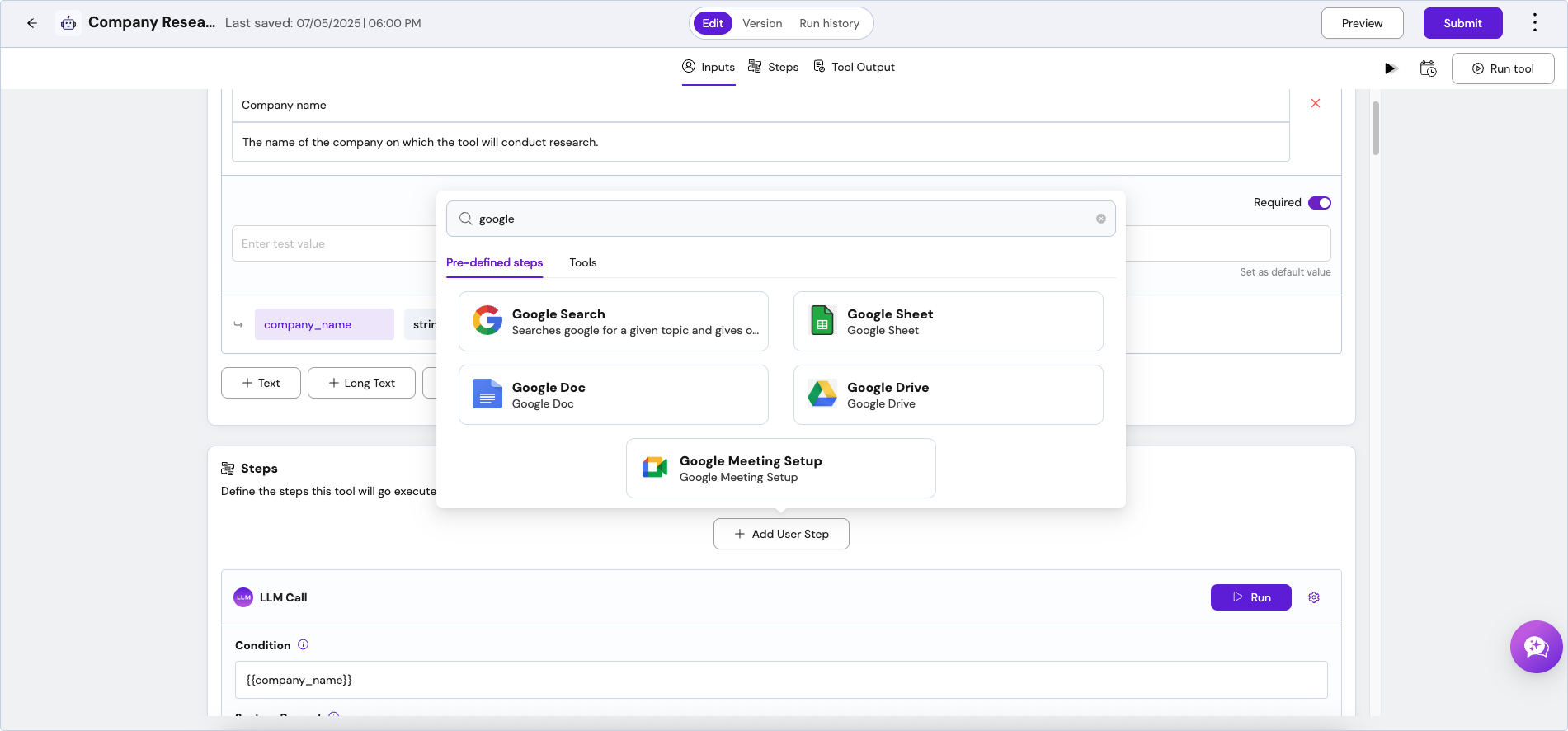

🔗 Google Connectors (Docs, Sheets, Drive)

Pre-built connectors are now available to pull data directly from your Google Drive, Sheets, and Docs. You can fetch, transform, and load this data into your Knowledgebase using a simple workflow—all without code.

🌐 Browsing Tool Step

Enable headless web browsing within your agents using the new Browsing Tool Step. Simply provide a URL and natural language instructions; our browsing agents will navigate and extract information as needed.

🔧 Enhancements

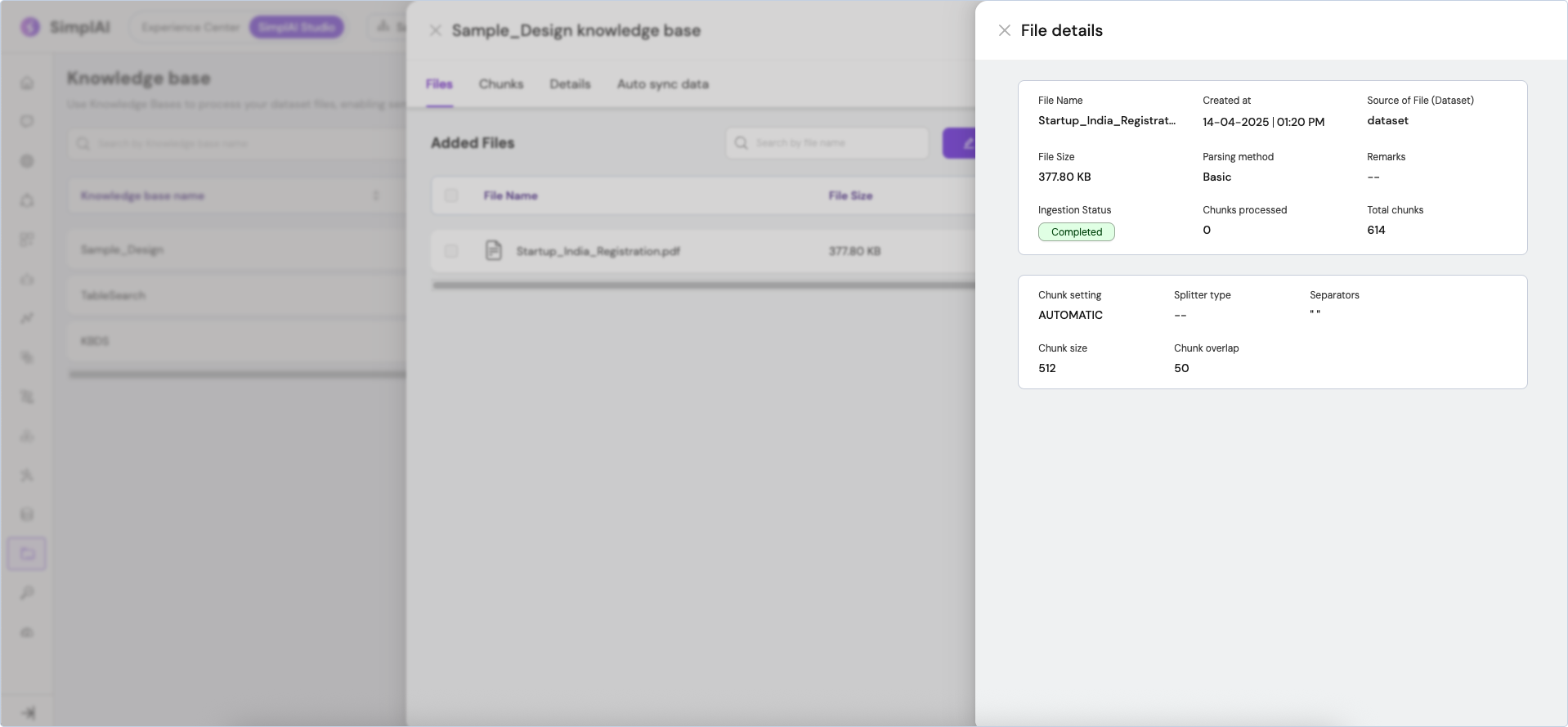

📊 Ingestion Details in Knowledgebase

You can now view detailed metadata about each ingestion process—parsing algorithms used, chunking strategy applied, and the total number of chunks processed—directly within the Knowledgebase.

⚡ Fast & Scalable Ingestion

Ingestion now uses parallel batch processing, which drastically improves performance. Large documents are parsed and ingested significantly faster, raising throughput across the platform.
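As a rough picture of the general technique, the Python sketch below splits a document's pages into batches and parses the batches concurrently. It does not describe SimplAI's internal pipeline. The function names (`make_batches`, `parse_batch`, `ingest`) and the batch and worker sizes are assumptions made for illustration, and `parse_batch` is a stand-in for real parsing and chunking work.

```python
from concurrent.futures import ThreadPoolExecutor


def make_batches(items, batch_size):
    """Split a list into consecutive batches of at most batch_size items."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def parse_batch(pages):
    """Placeholder parser: normalizes each page's text."""
    return [page.strip().lower() for page in pages]


def ingest(pages, batch_size=4, workers=4):
    """Parse batches in parallel and return all results in original order."""
    batches = make_batches(pages, batch_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in the order the batches were submitted.
        results = pool.map(parse_batch, batches)
        return [chunk for batch in results for chunk in batch]
```

Threads suit I/O-bound parsing such as calls to a remote parser. For CPU-bound parsing, a process pool would be the more usual choice.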

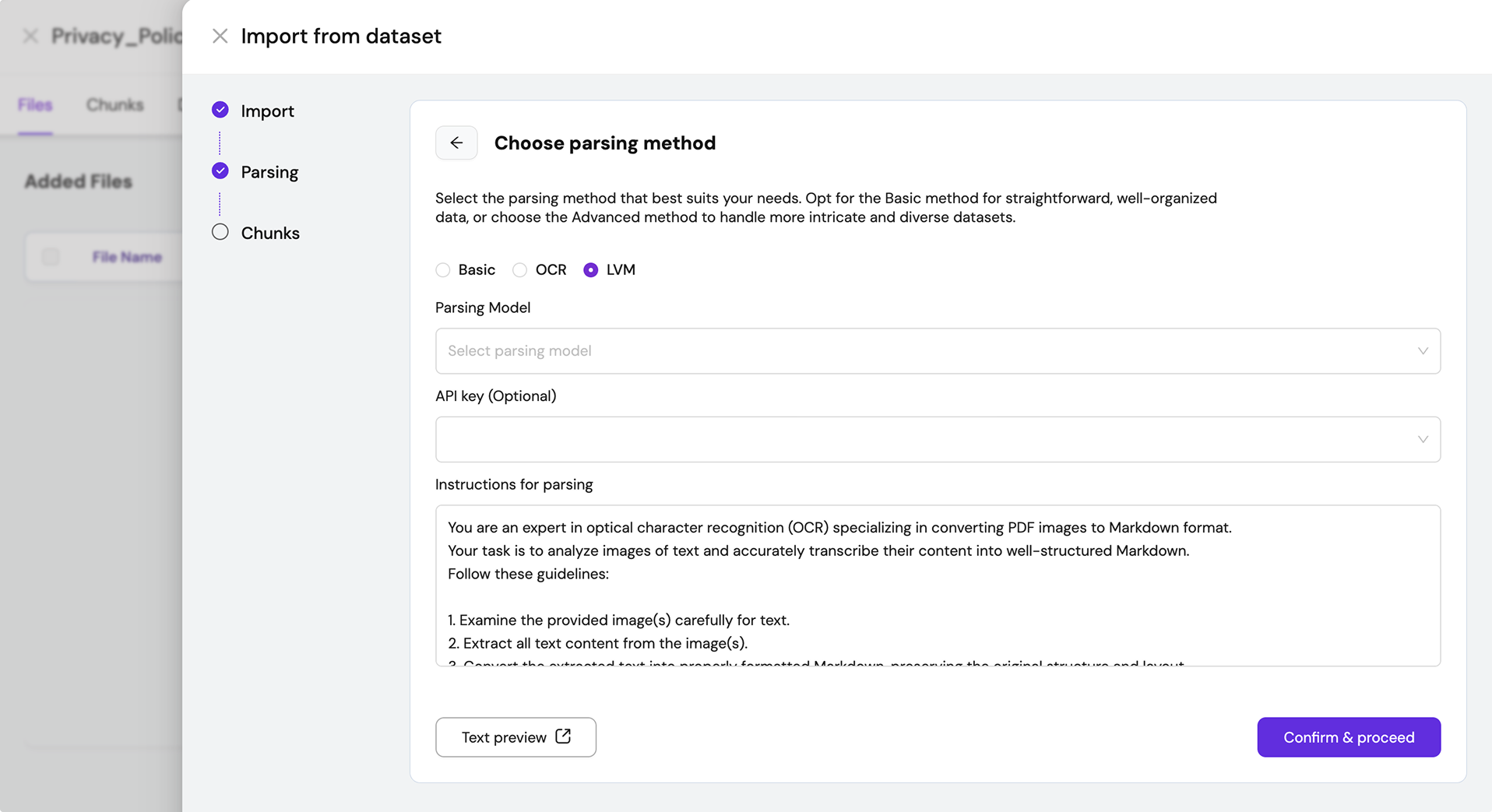

🔍 Parser Update – LVM Parsing (API Key Optional)

Using LVM-based parsers no longer requires manual API key input. If you've already connected LVM models, the platform now detects and uses them automatically—making the setup simpler and more user-friendly.

🚫 Max Tool Runs Limit

You can now define a maximum execution limit for each tool invoked by agents during a single request. This helps prevent runaway processes and gives you more control over agent behavior.
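The idea behind a per-request, per-tool cap can be sketched in a few lines of Python. This is an illustrative model only, not the platform's code. `ToolRunLimiter` and `ToolRunLimitExceeded` are hypothetical names, and the sketch assumes one limiter object is created for each incoming request.

```python
from collections import Counter


class ToolRunLimitExceeded(RuntimeError):
    """Raised when a tool is invoked more often than the configured limit."""


class ToolRunLimiter:
    """Counts tool invocations within one request and enforces a per-tool cap."""

    def __init__(self, max_runs_per_tool):
        self.max_runs = max_runs_per_tool
        self.counts = Counter()

    def run(self, tool_name, tool_fn, *args, **kwargs):
        """Invoke tool_fn unless tool_name has already reached its cap."""
        if self.counts[tool_name] >= self.max_runs:
            raise ToolRunLimitExceeded(
                f"{tool_name} exceeded {self.max_runs} runs in this request"
            )
        self.counts[tool_name] += 1
        return tool_fn(*args, **kwargs)
```

With a limit of 2, an agent that keeps calling the same search tool in a loop would be stopped on the third call, while other tools in the same request keep their own separate counts.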

For questions or support, contact us at [email protected]